The Fetishization of the AI Oracle

03–23–2023

Ngozi Harrison

Introduction

There is an ideological investment in the idea that technology is neutral. However, true neutrality does not exist, and the supposedly neutral position, the status quo, is typically one that serves power. This position reflects a Euclidean notion of spatiality, one that assumes absolute position and a top-down perspective. The notion of a "view from nowhere", a supposedly objective and detached position, should be questioned, as it assumes a single, solitary perspective from a static viewpoint. We must challenge this view and respond by understanding the world as round, and space and position as relative. This turn from the Euclidean to the non-Euclidean is a turn toward an embrace of subjectivity. Many scholars and thinkers have advocated for this understanding of spatiality as relative, against the Euclidean/Cartesian understanding of objectivity and neutrality. For example, Hito Steyerl examines the evolution of perspective and its ties to modern GIS technology in her essay In Free Fall: A Thought Experiment on Vertical Perspective [1]. Everything comes with its own epistemological and ontological presuppositions, which is why, as abstract as it may seem, it is necessary to investigate and critique these underpinnings. It is with this insight that we begin an investigation into the fetish object that is artificial intelligence. We will return to these notions of spatiality at the end to see what a non-Euclidean understanding of space has to say about the ways in which AI is shaping, and being shaped by, our modern techno-culture.

Gilbert Simondon, a French philosopher and theorist of science and technology, offers a speculative history of the technical that will be useful in this analysis. I first encountered this framework in a book I am currently working through, On the Existence of Digital Objects by Yuk Hui [2]. Simondon speaks of the bifurcation of magic into the religious and the technical; the technical is then further bifurcated into science (the theoretical) and technology (the practical). The practical can, in turn, be interpreted as that which serves capitalism. In our modern, or perhaps postmodern, era, the distinction between science and technology lies in how rapidly discoveries are commodified. Artificial intelligence is interesting because it seems to bridge these bifurcations one by one. First, it bridges the bifurcation of the theoretical and the practical through the rapid appropriation of theoretical insights into practical applications and commodified products. Many tech companies essentially function as ivory-tower research centers funded by advertising or SaaS businesses. There is something like a semi-permeable membrane through which discoveries diffuse from the highly theoretical to the practical to the consumer-facing. Next, AI undoes the bifurcation of the religious and the technical, bringing us back to an engagement with phenomena that feel almost magical. This bridge is built through obfuscation and opacity of operation. Magic is that which is not understood, where the substance and mode of operation exceed the human actors who participate in its rites. AI has taken on this nature through marketing campaigns, through its function, and through the way in which it is positioned within the culture: we are all implored not to look behind the curtain.

Artificial Intelligence as Fetish Object

In order to illuminate this more-than-human nature that AI has taken on, we can turn to Marx, and more specifically to the Marxist concept of commodity fetishism [3]. Marx's concept of commodity fetishism seeks to illuminate the way in which, under capitalism, commodities take on a life of their own, apart from the material relations they are produced under and the labor that goes into their production. To explain this more clearly, we must clarify two terms that have slightly different meanings here than their colloquial definitions: commodity and fetish. Commodity refers not only to the goods one might commonly think of, such as coffee, oil, or gold, but to any product or service produced with the intention of making a profit. Fetish is not to be taken in a sexual sense. Marx takes this term to mean a fetish object, something that is believed to have a special, inherent power. Through the fetishization of commodities, we obscure the social relations underlying their production, creating alienation.

My argument is that artificial intelligence has taken on the nature of a fetish object, meaning it has been imbued with these metaphysical properties, a substance beyond materiality and production. For example, DALL-E is presented as a mysterious entity that you can ask to create art on command, rather than as the cumulative work of the millions of artists on whose work its models were trained. The machine is the assembler and synthesizer, using increasing complexity and massive amounts of underlying data to obscure its source material.

Why Are Tech Bros Losing Their Minds?

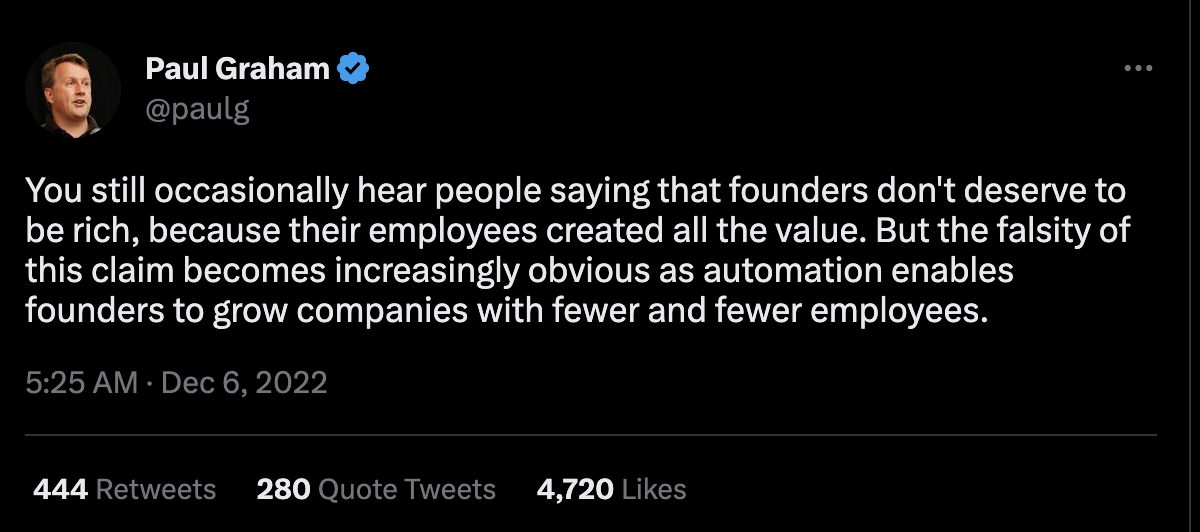

The evangelists and champions of AI would have us believe that AI will ultimately make work irrelevant and lead to the final triumph of capital over labor. Paul Graham recently posted a Twitter thread asserting that "AI is inductive proof that Marx was wrong about his labor theory of value". Relying on a simplistic reading of the labor theory of value (LTV) that essentializes Marxist theory, he posits that AI shows why founders deserve to make considerably more money than their workers. In fairness, he did delete the tweet referencing Marx, but he maintained, and in fact doubled down on, the point that founders can grow companies without many employees, and hence that employees are not valuable. The dubiousness of these claims is fairly self-evident, and I won't spend time disputing them here, but they provide a good opportunity to speak to the invisible labor that goes into AI and machine learning models.

AI and ML don't allow would-be founders to expunge labor completely; rather, they allow them to crowdsource labor for "free", or nearly free, and leverage it to capture its value. What we are talking about is simply labor that has been calcified into symbolic, algorithmic representation and abstracted away.

In a recent discussion between Ramon Amaro and Yuk Hui, moderated by Rana Dasgupta, Amaro addresses the now-commonplace narrative of AI taking "our" jobs, both cautioning against and interrogating the term "our" [4]. For many, this precarity has long been felt and has long existed, and the jobs have already been taken. He speaks of the work, often done by Black and Brown communities, of scanning books for Google's digital corpus for Google Scholar and Google Books. These jobs no longer exist, because their only value lay in their eventual disappearance, with the dead labor paradoxically becoming invisible through opacity.

The Labor behind LLMs

Next, we will try to de-fetishize AI by examining the materiality and social relations behind a type of AI that has become a frequent topic of conversation: large language models, or LLMs. For many people, ChatGPT seemed to come from nowhere, but it is the culmination of scientific breakthroughs and corporate innovation projects going back to 2016. OpenAI was founded by a group of Silicon Valley veterans including Sam Altman, Jessica Livingston, Peter Thiel, and Elon Musk. Sam Altman and Jessica Livingston are both affiliated with Y Combinator, as its former president and a founding partner, respectively. OpenAI is interesting because it is technically a nonprofit research lab, but in 2019, after a $1 billion investment, OpenAI signed an exclusive deal with Microsoft to use the Azure platform. This year, Microsoft has taken steps to integrate ChatGPT into its Bing search as an interactive prompt that synthesizes answers to search queries [5].

Where is the labor in this AI? When thinking about the labor that goes into these systems of automation, we have to talk about what Nick Dyer-Witheford calls the new cyber-proletariat [6]: a class that enables the hardware and software we engage with daily. For online platforms, there has been discussion of the content moderators and support technicians who sit behind screens around the world; recent investigations into Facebook, Twitter, and others have even brought this into the news cycle. With AI, however, this labor seems less obvious, perhaps because the entire point of the product, as it is marketed, is that by training on data (as long as the data is good and free of bias), less work, not more, is involved. A recent article in Time magazine breaks this paradigm and illuminates the way in which questions of responsibility don't just live at the site of creating the algorithm or assembling an inclusive dataset (there is a question to be investigated here about whether inclusion is the radical solution many believe it to be; see Simone Browne's work for more on this [7]). Billy Perrigo, an investigative reporter at Time, found that OpenAI had outsourced work to Kenyan workers, paying them less than $2 per hour, to help make the system less racist and hence more marketable as a product [8]. This was done via a company called Sama, which employs workers in Kenya, Uganda, and India. Sama describes itself as a data annotation platform and one of the first AI companies to be certified as a B Corporation. As a data annotation platform, the company employs data labelers to annotate datasets, purge hate speech, inappropriate language, and similar content from the datasets used by models, and curate data. As a B Corporation, Sama presents itself as uplifting growing economies in the Global South by providing tech-first jobs that create social impact. What Time further uncovers is the precarity of the working conditions, the abuses endured, and the toxic content workers were exposed to, all without mental health resources or organized labor.
I recommend reading the article, along with the others listed in the sources below, for a full picture of the situation many of these workers face. A key takeaway is that this is not an isolated incident but the current norm.

Sama, alongside Facebook, is also currently fighting a lawsuit brought by former content moderator Daniel Motaung, who alleges significant abuses and a toxic working environment [9]; the story has also been covered by Business Daily Africa [10]. This January, Sama announced that it would discontinue its work with Facebook, and its content moderation work in general [11]. The case is ongoing, and a Kenyan labor court recently ruled that, despite Meta's argument that Kenya does not have jurisdiction over the foreign corporation, it will not strike Facebook from the case. In a recent article, Adrienne Williams, Milagros Miceli, and Timnit Gebru refer to the "ghost work" that exists underneath these systems and runs counter to the narrative of sentient, intelligent systems; the term was coined by anthropologist Mary L. Gray and computational social scientist Siddharth Suri [12][13].

Far from the sophisticated, sentient machines portrayed in media and pop culture, so-called AI systems are fueled by millions of underpaid workers around the world, performing repetitive tasks under precarious labor conditions. (Williams, Miceli, and Gebru)

Sama is only one component in a network of digital labor exploitation that lies beneath the surface of the online platforms we engage with daily, and AI is no different. It is important to continue to unveil the practices behind these technologies to combat the view of AI as replacing labor.

What Does It Mean to Be Creative in the Age of AI?

We are going to need to develop a more discerning eye toward creative content: what we engage with, and what is meaningful. The entrance of automation into the creative sphere complicates our relationship to art and creativity. Is art for consumption, or is it meant to engage and challenge us? There will always be elevator music, hallway art, fluff pieces, and, most importantly, ads: art that helps facilitate capitalism and consumption. This will be the lowest-hanging fruit and the first thing to be automated.

Auto-spatialization and the Generation of Techno-culture

"ChatGPT is a part of a reality distortion field that obscures the underlying extractivism and diverts us into asking the wrong questions and worrying about the wrong things." (McQuillan) [17]

We can see the effects of this extractivism in the way the serpent is beginning to eat its own tail. Articles about incorrect answers from LLM chatbots get scraped and fed back into these very models to be regurgitated. For example, a few users on Twitter noticed that if you searched "Should I throw my car battery in the ocean?" on the newly ChatGPT-powered Bing, you would get the answer yes, with two articles cited as sources [18]. These were articles from 2021 documenting a similar phenomenon on Google, which ultimately traced back to several Quora answers that had been written in jest [19]. Similar behavior has been observed with Google: if you asked who invented running, you would receive a featured snippet answering that Thomas Running invented running in 1784 [20]. The Google examples are the result of featured snippets in search engines, not of the LLM chatbots now being rapidly added to Bing and Google, but they do indicate a behavior that will only continue.

One feature that may become important, and that we may begin to see integrated into these models, is some form of source citation. An example of this is perplexity.ai, which not only answers a specific query but also cites the sources used to develop the answer. Recent product demos of Google's integration of Bard into search suggest it may include a similar feature when launched. This feature is a very specific UI design change that represents a different way of understanding what a source means in this context.

In a recent New Yorker article, science fiction writer Ted Chiang compares ChatGPT to the lossy compression of JPEGs; I highly recommend reading it [21]. I want to offer another concept that gives us a way to frame the feedback loop we are beginning to see between machinic generation and culture. Celia Lury, Luciana Parisi, and Tiziana Terranova discuss the concept of auto-spatialization as a core component of what they term a topological turn in culture [22].

Auto-spatialization refers in Chatelet’s work to a changed relation between indices and that to which indices are supposed to point. In ‘classical’ mathematical calculation, he argues, a set of indices was neutral: indexation remained external to the development of calculation. Indices were operated as if notation was completely indifferent to that which it noted. In ‘contemporary’ calculation, he proposes however, notation is becoming concrete: indexation is no longer determined by an external ‘set’ (of numbers or data) but by a process of deformation in a surface that is itself in motion. Indexation is no longer reduced to the external evaluation of a collection or set, he says, but becomes ‘the protagonist of an experiment which secretes its own overflow’ (Chatelet, 2006: 40). What this suggests to us is that it is important to look at changes in the semiosis of contemporary culture, that is, changes in processes of abstraction and translation, of proportion and participation, ordering and valuing, sensing and knowing. Our suggestion is that there is currently a transformation in the operation and significance of the indexical in contemporary culture. (Lury, Parisi, Terranova)

Topology here refers to the mathematical concept of a topological space, encoding a kind of subjectivity and spatiality. Auto-spatialization refers to the ways in which the index or measurement inscribes itself on the surface of that which it seeks to measure, blurring the lines between the subject and the tool of observation. In my view, technological tools, data, measurement, and the like have previously acted as these indices, measuring and cataloging the world. Now they are producing output that deforms the surface of culture. This is a line of scholarship and inquiry I hope to spend more time with, to unveil the ways in which our modern techno-culture has taken a spatial turn and is already experiencing the continuous deformation and shaping of relations and culture by the algorithmic. How we contend with this shaping and deformation is an open question, but one that must be informed by a de-fetishization of the machines enacting these changes, in order to bolster analysis and critique and, ultimately, to reconceptualize how we engage with artificial intelligence.

Notes and Citations

[1] Hito Steyerl, The Wretched of the Screen

[2] Yuk Hui, On the Existence of Digital Objects

[3] Stanford Encyclopedia of Philosophy - Karl Marx

[4] Yuk Hui and Ramon Amaro in conversation with Rana Dasgupta (YouTube)

[5] OpenAI - Wikipedia

[6] Nick Dyer-Witheford, Cyber-Proletariat: Global Labour in the Digital Vortex (Digital Barricades: Interventions in Digital Culture and Politics)

[7] Simone Browne, Dark Matters: On the Surveillance of Blackness

[8] Exclusive: OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic - Time Magazine

[9] Inside Facebook's African Sweatshop - Time Magazine

[10] Facebook parent firm fails to stop court case in Kenya - Business Daily Africa

[11] Under Fire, Facebook's 'Ethical' Outsourcing Partner Quits Content Moderation Work - Time Magazine

[12] The Exploited Labor Behind Artificial Intelligence - Noema magazine

[13] Mary L. Gray and Siddharth Suri, Ghost Work: How to Stop Silicon Valley from Building a New Global Underclass

[14] Funding To Web3 Startups Plummets 74% in Q4 - Crunchbase News

[15] An Artificial Intelligence Developed Its Own Non-Human Language - The Atlantic

[16] Édouard Glissant, Poetics of Relation pg 84

[17] We come to bury ChatGPT, not to praise it. - Dan McQuillan

[18] AI is eating itself: Bing’s AI quotes COVID disinfo sourced from ChatGPT - Techcrunch

[19] Google Tells Search Users It’s a Good Idea to Throw Car Batteries Into the Ocean - The Drive

[20] Thomas Running - Collin Lysford

[21] ChatGPT Is a Blurry JPEG of the Web - New Yorker

[22] Celia Lury, Luciana Parisi, and Tiziana Terranova - The Becoming Topological of Culture

Notes